What do Data Scientists, Business Users, Analysts and Casual Consumers all have in common? They want access to clean, related, pertinent data now. They want self-service. Most importantly, they don’t want restrictions, and they don’t want to wait on IT. Insert the latest data integration, data management or data abstraction platform here, because the latest and greatest isn’t legacy. It doesn’t take months to build, and you can put it in the cloud where IT can’t touch it.

Guess what: every latest and greatest data platform of the last 30 years has started off this way (maybe not in the cloud, but you get the picture). Why? Because it solved a discrete business problem and it was free of the governing powers of IT. But then it happened. We needed to solve a second problem, and a third, and the cost of solving them individually became prohibitive.

Insert the Enterprise Data Warehouse, later labeled the Integrated Data Warehouse, and now labeled the Monolithic Data Warehouse (this term is particularly painful, but it reflects the market’s current perception). Teradata has been particularly effective at espousing the concept of integrating data for reuse, based on the economy of scale and ROI that data loaded once and reused many times provides an organization. And if we are truly honest with ourselves, it’s not that users don’t want clean, integrated, business-contextual data. The term Monolithic Data Warehouse isn’t really a comment on the benefits of a warehouse at all. It is a comment on the process, pain and effort it takes to build one.

So what changed? Didn’t we solve a litany of business problems with the Enterprise Data Warehouse by making companies more efficient and enabling them to capture more market share? Yes, we did! But the underlying desires of data consumers never wavered. They still don’t want to wait. They still want their data now, and they still want to be freed from the rigor of IT.

Now we have the moniker Modern Data Architecture to describe cloud-deployed data management and analytics solutions. If you read up on modern data architecture, a common thread among the varying descriptions is that it is defined as “not legacy”. What exactly is legacy data architecture? Where did these terms (legacy data architecture, modern data architecture) come from? They came from Big Data Platform vendors and consultancies seeking to differentiate Hadoop and Cloud Platforms from Data Warehouses. Obviously, legacy is bad and modern is good, right?

Here’s the rub… The Modern Data Architecture should, indeed must, include an integrated core to solve complex business problems. A business problem that traverses only one subject area or one business line can be solved on almost any platform. Solving the interesting business problems, the lucrative ones, requires integrated data. Where does integrated data flourish? In the Data Warehouse, not the Big Data Platform.

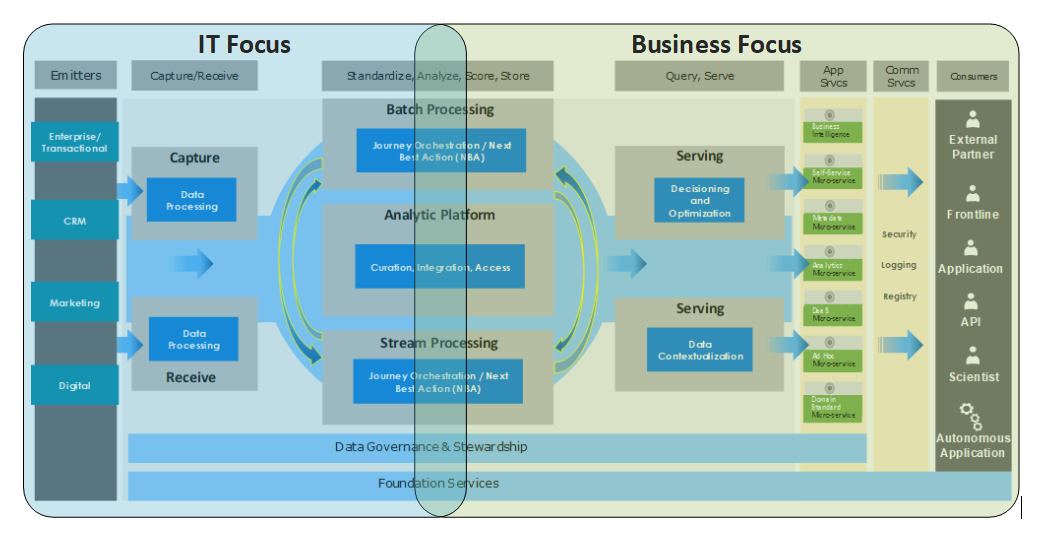

So how do we get there from here? We have Legacy Integrated Data that has been labelled too slow and too restrictive, and Modern Data Lakes that commonly suffer from curation, security, usability and data duplication issues. The answer is to look at the problem from right to left instead of left to right. IT is concerned with data management (the left side of the equation), and correctly so. Data management is the domain of tools, security and process. The business, on the other hand, is concerned with analytics and getting value from an organization’s data (the right side of the equation).

It is time to give the business exactly what it wants… access, control, clean data, self-service and more. The answer is not one platform or one technology. The answer is an Analytical Ecosystem defined by the usage patterns of actual users, rather than by the Legacy, Monolithic processes that built the Data Warehouse, including business case lobbying, requirements workshops and months of testing (also known as requirements gathering phase 2).

How do you build something based on a usage pattern before it’s built? More importantly, how can you have a usage pattern if something doesn’t exist? You treat every satellite platform as an extension of your integrated core, and you start integrating data based on the business case for consolidating operationalized sandboxes, data marts, operational data stores and labs. There is likely enough data deployed in your ecosystem today to support most business problems individually, but not efficiently. The key is consolidating your fabric (the integration methods that hold your Analytical Ecosystem together) into a manageable, monitorable solution that IT can leverage to deploy the critical, highly reused data components to the integrated core.

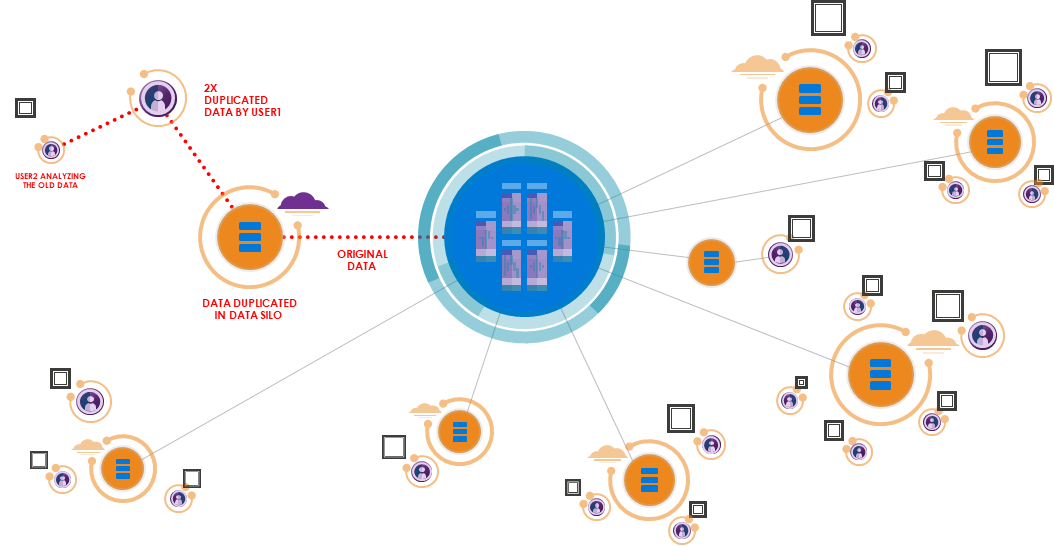

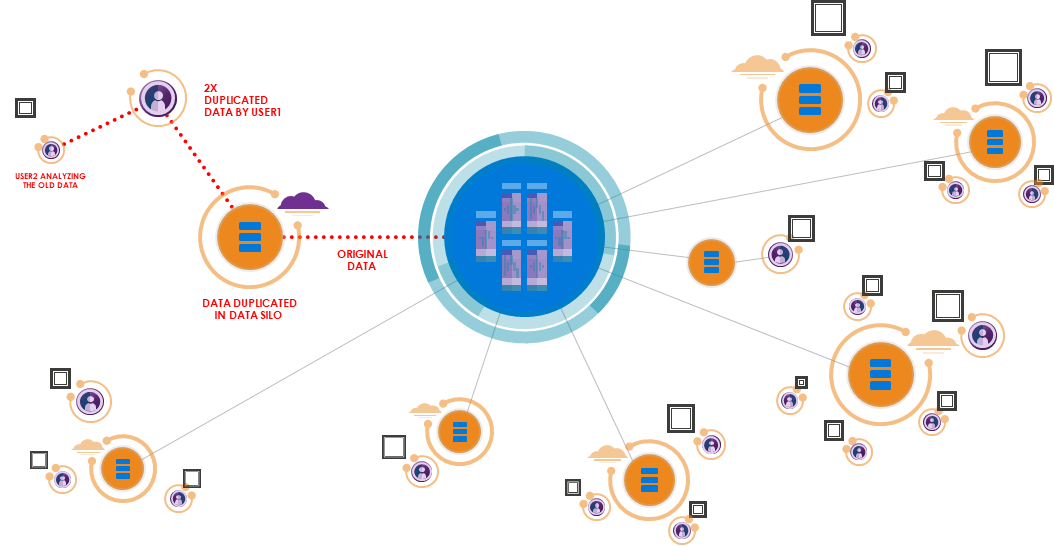

The figures above depict the disparate data stores that complex organizations have. As data practitioners, we have done a good job of getting the 80% of data that should be curated and integrated into the Data Warehouse. What we haven’t done is make that data accessible and easy to extend for specific business use cases. The business fundamentally doesn’t want to wait anymore. Our goal now is to enable self-service for data-centric business solutions via a connected fabric, and to look for opportunities to harvest curated, reusable data and bring it back to the core. This is facilitated by a fabric that enables virtual projections rather than physically duplicated data in each point solution.
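To make the idea of a virtual projection concrete, here is a minimal sketch that uses a SQLite view as a stand-in for a fabric-delivered projection. It is an illustration under stated assumptions, not a description of any particular data fabric product: the `customer` and `sales` tables, the `regional_sales` view and the `regional_totals` helper are all hypothetical. The point it shows is that a point solution can read a use-case-specific shape of core data without a duplicated physical copy, so changes in the core are visible immediately.

```python
import sqlite3

# In-memory database standing in for the integrated core (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, region TEXT);
CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customer VALUES (1, 'East'), (2, 'West');
INSERT INTO sales VALUES (10, 1, 100.0), (11, 1, 50.0), (12, 2, 75.0);

-- Virtual projection for one business use case: a view, so no rows are copied.
CREATE VIEW regional_sales AS
SELECT c.region, SUM(s.amount) AS total_amount
FROM sales s JOIN customer c ON c.customer_id = s.customer_id
GROUP BY c.region;
""")


def regional_totals(connection):
    """Return {region: total_amount}, read through the view rather than a copy."""
    rows = connection.execute(
        "SELECT region, total_amount FROM regional_sales ORDER BY region"
    )
    return dict(rows.fetchall())


print(regional_totals(conn))  # {'East': 150.0, 'West': 75.0}

# A new sale landed in the core is visible through the projection right away,
# because the view is evaluated at query time instead of being stored separately.
conn.execute("INSERT INTO sales VALUES (13, 2, 25.0)")
print(regional_totals(conn))  # {'East': 150.0, 'West': 100.0}
```

A physically duplicated extract would have to be reloaded to pick up the new sale; the view trades that reload for query-time cost against the core, which is the trade-off IT then manages and monitors.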

Furthermore, ETL/ELT is not dead, but the death march to deploy each individual project into the integrated core is. IT, it is time to open the borders of the integrated core and ensure users have access. Let data consumers leverage the clean, curated data for all their discrete business problems. Have them use your data fabric to get a right-time, right-place projection of the data from your core that meets their business needs. Then it’s up to you to manage, monitor and put forth business cases to consolidate and rationalize data back into the core.

The Data Warehouse isn’t legacy. It is the core of the Modern Data Architecture.

Special thanks to the following contributors: Bob Montemurro, Mark Mitchell, Bill Falck, Jon Forinash and Berit Zidow.